Creating Provably Correct Knowledge Products

The old cliché "Garbage in, garbage out" applies precisely to the creation of knowledge products, and to data products and information products for that matter. Here is a quick refresher that explains the difference between these types of products:

- Data product: a reusable raw and unprocessed data asset, engineered to deliver a trusted dataset to a user for a specific purpose.

- Information product: organized, processed, and perhaps even interpreted data which provides context and meaning.

- Knowledge product: refined, actionable information that has been processed, organized, structured, or put into practice in a way that makes the information super-useful.

- Decision product: tells a business professional what they need to do, or actually executes an action, making use of the information in the product.

For a machine-readable information set to be useful, that information needs to be trustworthy. So what does that mean, “trustworthy data/information/knowledge product”? How can you prove such a product is trustworthy? How do you create such a trustworthy product?

There are two dynamics at play here which can be described by the rules of logical reasoning: validity and soundness.

Validity against a set of deductive rules allows the first aspect of the “trustworthy” stamp to be placed on a data/information/knowledge product. Saying this another way, a product can be shown to be valid only where deductive rules are provided to verify it. Such products are trustworthy only to the extent of the deductive rules used to prove that validity.
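To make the idea of validity concrete, here is a minimal sketch in Python. It is not the Seattle Method or XBRL tooling; the fact names, the two rules, and the `validate` function are hypothetical and chosen only for illustration. It shows a small set of facts being checked against a set of machine-readable deductive rules.

```python
from typing import Callable, Dict, List, Tuple

Facts = Dict[str, float]
Rule = Tuple[str, Callable[[Facts], bool]]

# Hypothetical rule set: the only things this "template" knows how to check.
RULES: List[Rule] = [
    ("Assets = Liabilities + Equity",
     lambda f: f["Assets"] == f["Liabilities"] + f["Equity"]),
    ("NetIncome = Revenues - Expenses",
     lambda f: f["NetIncome"] == f["Revenues"] - f["Expenses"]),
]

def validate(facts: Facts, rules: List[Rule]) -> List[str]:
    """Return the names of the rules the facts violate (empty means valid)."""
    return [name for name, check in rules if not check(facts)]

# Illustrative facts only; whole-number values keep the equality checks exact.
good = {"Assets": 5000.0, "Liabilities": 3000.0, "Equity": 2000.0,
        "Revenues": 1000.0, "Expenses": 400.0, "NetIncome": 600.0}
bad = dict(good, Equity=1500.0)  # breaks the first rule only

print(validate(good, RULES))  # []
print(validate(bad, RULES))   # ['Assets = Liabilities + Equity']
```

An empty list means the product is valid with respect to these two rules and nothing more. Anything the rules do not mention is simply not checked.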

Deductive logic is precise because it provides certainty. Deductive rules supply what amounts to a “template” of perfect and precise thinking for both humans and machines to make use of. Humans look at the data/information/knowledge visually; machines, such as an intelligent software agent, process the information to perform some task.

Soundness is another important factor in making use of data/information/knowledge products. Soundness is the measure of how precisely the deductive rules reflect the “reality” defined for an area of knowledge. A data/information/knowledge product can be provably valid per a set of provided machine-readable deductive rules that one trusts, but if that set of deductive rules does not reflect your “reality”, then automated machine-based processes might provide unexpected results when that data/information/knowledge is used.
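The difference between validity and soundness can be sketched in a few lines of Python. The report values and both rules below are hypothetical; the "unsound" rule contains a deliberate mistake (it adds expenses instead of subtracting them) so that a report can be valid against it while still being wrong about reality.

```python
from typing import Dict

Facts = Dict[str, float]

def unsound_rule(f: Facts) -> bool:
    # Deliberately wrong for illustration: expenses are added, not subtracted.
    return f["NetIncome"] == f["Revenues"] + f["Expenses"]

def sound_rule(f: Facts) -> bool:
    # Reflects the intended "reality": net income is revenues less expenses.
    return f["NetIncome"] == f["Revenues"] - f["Expenses"]

# Hypothetical report that was built to satisfy the flawed rule.
report = {"Revenues": 1000.0, "Expenses": 400.0, "NetIncome": 1400.0}

print(unsound_rule(report))  # True: valid per the rules provided
print(sound_rule(report))    # False: those rules did not reflect reality
```

The report passes every rule it was given, yet any process that consumes its net income figure will be working with a wrong number. Validity was proven; soundness was never there.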

The set of machine-readable deductive rules serves as a deductive "template" for creating the data/information/knowledge in the product.

An example of such deductive templates is provided by my Seattle Method guidance for creating a financial report. (Here is a working example in the form of my PROOF.)

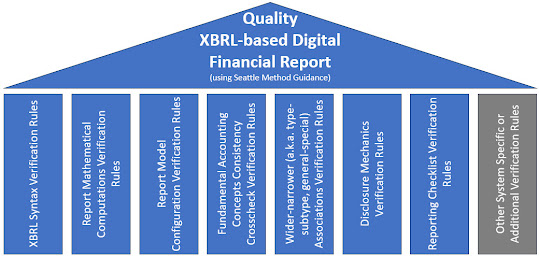

The document Understanding What Can Go Wrong helps you understand why each of the columns of rules exists. The document Essentials of XBRL-based Digital Financial Reporting provides a more detailed perspective.

These “columns” of the Seattle Method, shown in the image above, define those deductive templates in the form of global standard XBRL-based rules. The rules make it possible to produce provably trustworthy information, such as a global standard XBRL-based financial report of professional-level quality. Blind spots are eliminated, and the quality of information products such as these general purpose financial reports can be moved from sigma level 3, which is about a 6.7% defect rate, to sigma level 6, which is about a 0.00034% defect rate.
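The two defect rates quoted above can be reproduced with a short calculation. This sketch assumes the usual Six Sigma convention of a 1.5 sigma long-term shift, so the defect rate is the upper tail of a standard normal distribution beyond (sigma level minus 1.5). The function name `defect_rate` is my own for this example.

```python
from statistics import NormalDist

def defect_rate(sigma_level: float, shift: float = 1.5) -> float:
    """Fraction of defects at a sigma level, assuming the conventional 1.5 sigma shift."""
    return 1.0 - NormalDist().cdf(sigma_level - shift)

for level in (3, 6):
    rate = defect_rate(level)
    # Show the rate as a percentage and as defects per million opportunities.
    print(f"sigma {level}: {rate:.5%} ({rate * 1_000_000:.1f} per million)")
```

Running this gives roughly 6.68% (about 66,807 defects per million) for sigma level 3 and roughly 0.00034% (about 3.4 defects per million) for sigma level 6.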

It is important to grasp that the information is only as trustworthy as the provided rules allow. If you want more trustworthiness, then additional rules MUST be provided. The Seattle Method only specifies the obviously necessary keystones. For example, the Seattle Method does not provide a spell checker. If there are other blind spots that your system needs to eliminate, then additional categories of rules might be necessary.
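Here is a minimal Python sketch of a blind spot being closed by adding a rule. The rule names and the report are hypothetical and are not taken from the Seattle Method. The report has every sign reversed, which a roll-up rule alone cannot see.

```python
from typing import Callable, Dict, List

Facts = Dict[str, float]
Rule = Callable[[Facts], bool]

def rolls_up(f: Facts) -> bool:
    # Mathematical relation: the balance sheet must balance.
    return f["Assets"] == f["Liabilities"] + f["Equity"]

def assets_not_negative(f: Facts) -> bool:
    # Additional rule covering a different kind of error (reversed sign).
    return f["Assets"] >= 0

def passes(facts: Facts, rules: List[Rule]) -> bool:
    """True only if the facts satisfy every rule provided."""
    return all(rule(facts) for rule in rules)

# Hypothetical report with all signs reversed; it still balances.
report = {"Assets": -5000.0, "Liabilities": -3000.0, "Equity": -2000.0}

print(passes(report, [rolls_up]))                       # True: blind spot
print(passes(report, [rolls_up, assets_not_negative]))  # False: error caught
```

With one rule the defective report is "trustworthy"; with two it is not. The report did not change, only the extent of the rules used to check it.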

These same ideas appear in C. Maria Keet, PhD's book An Introduction to Ontology Engineering. Keet uses the terms consistency, precision, and coverage (PDF page 23). Consistency seems to be equivalent to validity; precision seems to be equivalent to soundness; and coverage is about completeness, that is, how thorough you are in representing the deductive rules related to blind spot elimination.

These data/information/knowledge products can be taken to an entirely new level by turning them into an NFT. But I will leave that discussion for another day.
